When 512×512 is Not Enough: Local Degradation-Aware Multi-Diffusion for Extreme Image Super-Resolution

Abstract

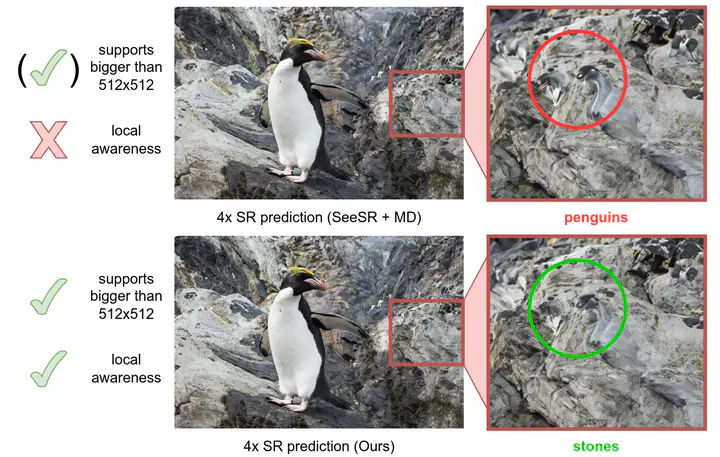

Large-scale, pre-trained Text-to-Image (T2I) diffusion models have gained significant popularity in image generation tasks and have shown unexpected potential in image Super-Resolution (SR). However, most existing T2I diffusion models are trained with a resolution limit of 512×512, making scaling beyond this resolution an unresolved but necessary challenge for image SR. In this work, we introduce a novel approach that, for the first time, enables these models to generate 2K, 4K, and even 8K images without any additional training. Our method combines two components: MultiDiffusion, which distributes the generation across multiple diffusion paths to ensure global coherence at larger scales, and local degradation-aware prompt extraction, which guides the T2I model to reconstruct fine local structures according to the low-resolution input. Together, these components unlock higher resolutions, allowing T2I diffusion models to be applied to image SR tasks without a limit on resolution.
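To make the idea of distributing generation across multiple diffusion paths more concrete, the following is a minimal NumPy sketch of a MultiDiffusion-style fusion step: overlapping windows of a large latent canvas are processed independently and then averaged where they overlap. It is an illustration under stated assumptions, not the implementation from the paper. The names `window_starts`, `fuse_windows`, and `denoise_fn`, as well as the window size of 64 and stride of 48, are hypothetical choices made for this example.

```python
import numpy as np


def window_starts(size, window, stride):
    """Start offsets of windows of length `window` that cover [0, size)."""
    if size <= window:
        return [0]
    starts = list(range(0, size - window + 1, stride))
    if starts[-1] != size - window:
        # Add a final window flush with the border so no pixel is left uncovered.
        starts.append(size - window)
    return starts


def fuse_windows(canvas, denoise_fn, window=64, stride=48):
    """Apply `denoise_fn` to overlapping windows and average the overlaps.

    canvas:     array of shape (C, H, W), e.g. a latent larger than the
                model's native size (assumption: 64x64 latents per window).
    denoise_fn: hypothetical stand-in for one denoising step of a
                pre-trained model on a single window; it must return an
                array with the same shape as its input.
    """
    _, h, w = canvas.shape
    acc = np.zeros_like(canvas, dtype=np.float64)    # sum of window outputs
    count = np.zeros((1, h, w), dtype=np.float64)    # windows covering each pixel
    for top in window_starts(h, window, stride):
        for left in window_starts(w, window, stride):
            rows = slice(top, top + window)
            cols = slice(left, left + window)
            acc[:, rows, cols] += denoise_fn(canvas[:, rows, cols])
            count[:, rows, cols] += 1.0
    # Every pixel is covered at least once, so the division is safe.
    return acc / count


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    latent = rng.normal(size=(4, 256, 256))
    fused = fuse_windows(latent, lambda tile: 0.5 * tile)
    print(fused.shape)
```

In a full sampler, a step like this would be repeated at every diffusion timestep, and `denoise_fn` could additionally receive a prompt extracted for that specific window, which is where a local, per-region prompt would plug in.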

Citation

If you use this information, the method, or the associated code, please cite our paper:

    @INPROCEEDINGS{Moser2025ZiDo,
      author={Moser, Brian B. and Frolov, Stanislav and Nauen, Tobias and Raue, Federico and Dengel, Andreas},
      booktitle={2025 IEEE International Conference on Image Processing (ICIP)},
      title={When 512×512 is Not Enough: Local Degradation-Aware Multi-Diffusion for Extreme Image Super-Resolution},
      year={2025},
      volume={},
      number={},
      pages={1223--1228},
      keywords={Training;Measurement;Image synthesis;Superresolution;Text to image;Coherence;Diffusion models;Image restoration;Image Super-Resolution;Text-to-Image Synthesis;Diffusion Models},
      doi={10.1109/ICIP55913.2025.11084710}
    }