Abstract

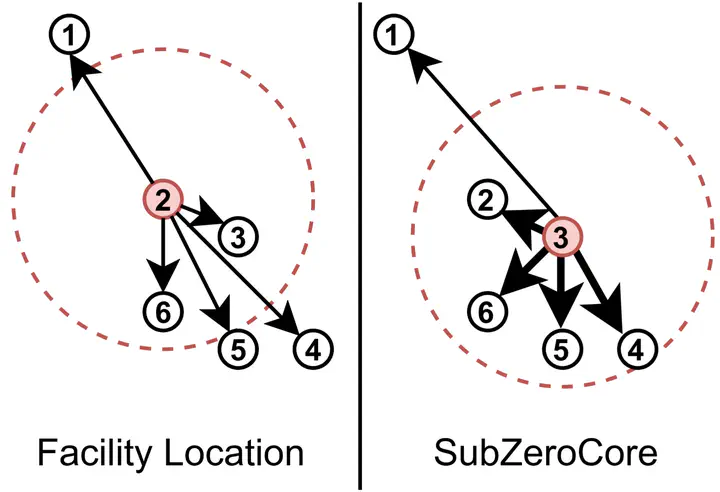

The goal of coreset selection is to identify representative subsets of datasets for efficient model training. Yet existing approaches paradoxically require expensive training-based signals, e.g., gradients, decision boundary estimates, or forgetting counts, computed over the entire dataset prior to pruning. This undermines their very purpose, because it requires training on the samples they aim to avoid. We introduce SubZeroCore, a novel, training-free coreset selection method that integrates submodular coverage and density into a single, unified objective. To achieve this, we propose a sampling strategy based on a closed-form solution that optimally balances these objectives. The strategy is guided by a single hyperparameter that explicitly controls the desired coverage for local density measures. Despite requiring no training, SubZeroCore matches training-based baselines in extensive evaluations and significantly outperforms them at high pruning rates, while dramatically reducing computational overhead. SubZeroCore also demonstrates superior robustness to label noise, highlighting its practical effectiveness and scalability for real-world scenarios.
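
The abstract does not spell out SubZeroCore's objective or its closed-form sampling rule. The sketch below is therefore only a generic illustration of the two ingredients it names: a training-free greedy selection over precomputed feature embeddings that maximizes a facility-location coverage term plus a k-nearest-neighbour density bonus. The function `greedy_coverage_density` and its parameters `k` and `lam` are hypothetical and were chosen for this example. They are not the paper's objective or its coverage hyperparameter. The embeddings `X` are assumed to come from some fixed, pretrained encoder, so no training on the candidate samples is needed.

```python
import numpy as np


def greedy_coverage_density(X: np.ndarray, budget: int, k: int = 10, lam: float = 0.5) -> list[int]:
    """Hypothetical sketch (NOT the SubZeroCore objective): greedily pick `budget`
    rows of X maximizing (1 - lam) * facility-location coverage + lam * sum of
    k-NN density scores. `k` and `lam` are illustrative parameters only."""
    n = X.shape[0]
    # Pairwise Euclidean distances (n x n); a dense matrix, so only for small n.
    sq = np.sum(X ** 2, axis=1)
    D = np.sqrt(np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0))
    # Gaussian similarity in (0, 1], bandwidth set by the median pairwise distance.
    S = np.exp(-D ** 2 / (np.median(D) ** 2 + 1e-12))
    # Local density: inverse mean distance to the k nearest neighbours (column 0 is self).
    knn = np.sort(D, axis=1)[:, 1:k + 1]
    density = 1.0 / (knn.mean(axis=1) + 1e-12)
    density = density / density.max()  # normalise to (0, 1]

    selected: list[int] = []
    taken = np.zeros(n, dtype=bool)
    best_cover = np.zeros(n)  # best similarity of each point to the selected set
    for _ in range(min(budget, n)):
        # Marginal coverage gain of candidate j: sum_i max(0, S[i, j] - best_cover[i]).
        gains = np.maximum(S - best_cover[:, None], 0.0).sum(axis=0)
        scores = (1.0 - lam) * gains + lam * density
        scores[taken] = -np.inf  # never reselect a point
        j = int(np.argmax(scores))
        selected.append(j)
        taken[j] = True
        best_cover = np.maximum(best_cover, S[:, j])
    return selected


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feats = rng.normal(size=(200, 16))  # stand-in for pretrained embeddings
    print(greedy_coverage_density(feats, budget=10))
```

Because the density bonus is a nonnegative modular term, adding it to facility location keeps the objective monotone submodular, so this greedy loop retains the standard (1 - 1/e) approximation guarantee under a cardinality budget. The dense distance matrix is the main simplification here; larger datasets would need approximate nearest-neighbour search.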

Citation

If you use this method or the associated code, please cite our paper:

@misc{moser2025subzerocore,
  title = {SubZeroCore: A Submodular Approach with Zero Training for Coreset Selection},
  author = {Brian B. Moser and Tobias C. Nauen and Arundhati S. Shanbhag and Federico Raue and Stanislav Frolov and Joachim Folz and Andreas Dengel},
  year = {2025},
  eprint = {2509.21748},
  archivePrefix = {arXiv},
  primaryClass = {cs.LG},
  url = {https://arxiv.org/abs/2509.21748},
}